JASP

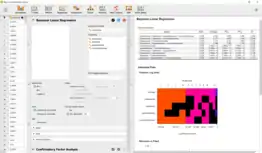

JASP (Jeffreys’s Amazing Statistics Program[1]) is a free and open-source program for statistical analysis supported by the University of Amsterdam. It is designed to be easy to use and familiar to users of SPSS. It offers standard analysis procedures in both their classical and Bayesian forms.[2][3] JASP generally produces APA-style results tables and plots to ease publication. It promotes open science through integration with the Open Science Framework, and reproducibility by embedding the analysis settings in the results. The development of JASP is financially supported by several universities and research funds.

| Stable release | 0.17.1 / February 13, 2023 |

|---|---|

| Repository | JASP Github page |

| Written in | C++, R, JavaScript |

| Operating system | Microsoft Windows, Mac OS X and Linux |

| Type | Statistics |

| License | GNU Affero General Public License |

| Website | jasp-stats |

Analyses

JASP offers frequentist inference and Bayesian inference on the same statistical models. Frequentist inference uses p-values and confidence intervals to control error rates in the limit of infinite perfect replications. Bayesian inference uses credible intervals and Bayes factors[4][5] to estimate credible parameter values and model evidence given the available data and prior knowledge.
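The contrast between the two approaches can be illustrated with a binomial test. The following is a minimal sketch in Python, not JASP's own implementation: the function names are hypothetical, and the uniform prior on the success probability under the alternative hypothesis is an assumption chosen for illustration. It computes an exact two-sided p-value and a Bayes factor for the same data.

```python
from math import comb


def binomial_two_sided_p(k: int, n: int, theta0: float = 0.5) -> float:
    """Exact two-sided p-value for k successes in n trials under H0: theta = theta0.

    Sums the probabilities of all outcomes that are no more likely than
    the observed one under H0.
    """
    pmf = [comb(n, i) * theta0**i * (1 - theta0) ** (n - i) for i in range(n + 1)]
    # A small relative tolerance guards against floating-point ties.
    threshold = pmf[k] * (1 + 1e-9)
    return min(1.0, sum(p for p in pmf if p <= threshold))


def binomial_bf10(k: int, n: int, theta0: float = 0.5) -> float:
    """Bayes factor for H1 against H0: theta = theta0.

    Assumption: under H1, theta has a uniform prior on [0, 1]. The marginal
    likelihood of the data under that prior is exactly 1 / (n + 1).
    """
    marginal_h1 = 1.0 / (n + 1)
    likelihood_h0 = comb(n, k) * theta0**k * (1 - theta0) ** (n - k)
    return marginal_h1 / likelihood_h0


if __name__ == "__main__":
    k, n = 62, 100  # hypothetical data: 62 successes in 100 trials
    print(f"p-value: {binomial_two_sided_p(k, n):.4f}")
    print(f"BF10:    {binomial_bf10(k, n):.3f}")
```

The p-value is a statement about how extreme the data are if the null hypothesis holds, whereas the Bayes factor compares how well each hypothesis predicted the observed data. A different prior under the alternative hypothesis would give a different Bayes factor.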

The following analyses are available in JASP:

| Analysis | Frequentist | Bayesian |

|---|---|---|

| A/B test | ||

| ANOVA, ANCOVA, Repeated measures ANOVA and MANOVA | ||

| Audit | ||

| Bain | ||

| Binomial test | ||

| Confirmatory factor analysis (CFA) | ||

| Contingency tables (including Chi-squared test) | ||

| Correlation:[6] Pearson, Spearman, and Kendall | ||

| Equivalence T-Tests: Independent, Paired, One-Sample | ||

| Exploratory factor analysis (EFA) | ||

| Linear regression | ||

| Logistic regression | ||

| Log-linear regression | ||

| Machine Learning | ||

| Mann-Whitney U and Wilcoxon | ||

| Mediation Analysis | ||

| Meta Analysis | ||

| Mixed Models | ||

| Multinomial test | ||

| Network Analysis | ||

| Principal component analysis (PCA) | ||

| Reliability analyses: α, γδ, and ω | ||

| Structural equation modeling (SEM) | ||

| Summary Stats[7] | ||

| T-tests: independent, paired, one-sample | ||

| Visual Modeling: Linear, Mixed, Generalized Linear | ||

Other features

- Descriptive statistics.

- Assumption checks for all analyses, including Levene's test, the Shapiro–Wilk test, and Q–Q plots.

- Imports SPSS files and comma-separated files.

- Open Science Framework integration.

- Data filtering: Use either R code or a drag-and-drop GUI to select cases of interest.

- Create columns: Use either R code or a drag-and-drop GUI to create new variables from existing ones.

- Copy tables in LaTeX format.

- Plot editing and raincloud plots.

- PDF export of results.

- Imports SQL databases (since v0.16.4).

Modules

- Audit: Planning, selection and evaluation of statistical audit samples, and methods for data auditing (e.g., Benford’s law).

- Summary statistics: Bayesian inference from frequentist summary statistics for t-tests, regression, and binomial tests.

- Bain: Bayesian informative hypotheses evaluation[8] for t-tests, ANOVA, ANCOVA, linear regression and structural equation modeling.

- Network: Network Analysis allows the user to analyze the network structure of variables.

- Meta Analysis: Includes techniques for fixed- and random-effects analysis, fixed- and mixed-effects meta-regression, forest and funnel plots, tests for funnel plot asymmetry, trim-and-fill, and fail-safe N analysis.

- Machine Learning: The Machine Learning module contains 19 analyses for supervised and unsupervised learning:

  - Regression

    - Boosting Regression

    - Decision Tree Regression

    - K-Nearest Neighbors Regression

    - Neural Network Regression

    - Random Forest Regression

    - Regularized Linear Regression

    - Support Vector Machine Regression

  - Classification

    - Boosting Classification

    - Decision Tree Classification

    - K-Nearest Neighbors Classification

    - Neural Network Classification

    - Linear Discriminant Classification

    - Random Forest Classification

    - Support Vector Machine Classification

  - Clustering

    - Density-Based Clustering

    - Fuzzy C-Means Clustering

    - Hierarchical Clustering

    - Neighborhood-based Clustering (i.e., K-Means Clustering, K-Medians Clustering, K-Medoids Clustering)

    - Random Forest Clustering

- SEM: Structural equation modeling.[9]

- JAGS module

- Discover distributions

- Equivalence testing

- Cochrane meta-analyses
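The Benford's law check mentioned under the Audit module rests on a simple formula: the expected proportion of values whose leading digit is d is log10(1 + 1/d). The following is a minimal sketch in Python, not the Audit module's own code; the function names are hypothetical. It computes the expected proportions and the observed leading-digit proportions of a data set so the two can be compared.

```python
from collections import Counter
from math import log10


def benford_expected() -> dict[int, float]:
    """Expected proportion of each leading digit 1..9 under Benford's law."""
    return {d: log10(1 + 1 / d) for d in range(1, 10)}


def first_digit(x: float) -> int:
    """Leading non-zero digit of x, read from its scientific notation."""
    return int(f"{abs(x):.15e}"[0])


def first_digit_proportions(values) -> dict[int, float]:
    """Observed proportion of each leading digit, ignoring zeros."""
    digits = [first_digit(v) for v in values if v != 0]
    if not digits:
        raise ValueError("need at least one non-zero value")
    counts = Counter(digits)
    return {d: counts.get(d, 0) / len(digits) for d in range(1, 10)}


if __name__ == "__main__":
    # Hypothetical data: powers of 2 are known to follow Benford's law closely.
    data = [2**i for i in range(1, 200)]
    expected = benford_expected()
    observed = first_digit_proportions(data)
    for d in range(1, 10):
        print(f"{d}: expected {expected[d]:.3f}, observed {observed[d]:.3f}")
```

Large gaps between observed and expected proportions can flag data for closer inspection. A formal audit would use a statistical test rather than a visual comparison.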

References

- "FAQ - JASP". JASP. Retrieved 18 February 2022.

- Wagenmakers EJ, Love J, Marsman M, Jamil T, Ly A, Verhagen J, et al. (February 2018). "Bayesian inference for psychology. Part II: Example applications with JASP". Psychonomic Bulletin & Review. 25 (1): 58–76. doi:10.3758/s13423-017-1323-7. PMC 5862926. PMID 28685272.

- Love J, Selker R, Verhagen J, Marsman M, Gronau QF, Jamil T, Smira M, Epskamp S, Wil A, Ly A, Matzke D, Wagenmakers EJ, Morey MD, Rouder JN (2015). "Software to Sharpen Your Stats". APS Observer. 28 (3).

- Quintana DS, Williams DR (June 2018). "Bayesian alternatives for common null-hypothesis significance tests in psychiatry: a non-technical guide using JASP". BMC Psychiatry. 18 (1): 178. doi:10.1186/s12888-018-1761-4. PMC 5991426. PMID 29879931.

- Brydges CR, Gaeta L (December 2019). "An Introduction to Calculating Bayes Factors in JASP for Speech, Language, and Hearing Research". Journal of Speech, Language, and Hearing Research. 62 (12): 4523–4533. doi:10.1044/2019_JSLHR-H-19-0183. PMID 31830850. S2CID 209342577.

- Nuzzo RL (December 2017). "An Introduction to Bayesian Data Analysis for Correlations". PM&R. 9 (12): 1278–1282. doi:10.1016/j.pmrj.2017.11.003. PMID 29274678.

- Ly A, Raj A, Etz A, Marsman M, Gronau QF, Wagenmakers E (2017-05-30). "Bayesian Reanalyses from Summary Statistics: A Guide for Academic Consumers". Open Science Framework.

- Gu X, Mulder J, Hoijtink H (2018). "Approximated adjusted fractional Bayes factors: A general method for testing informative hypotheses". British Journal of Mathematical and Statistical Psychology. 71 (2): 229–261. doi:10.1111/bmsp.12110. ISSN 2044-8317. PMID 28857129.

- Kline RB (2015-11-03). Principles and Practice of Structural Equation Modeling, Fourth Edition. Guilford Publications. ISBN 9781462523351.