Attention (machine learning)

In artificial neural networks, attention is a technique meant to mimic cognitive attention. It enhances some parts of the input data while diminishing others, the motivation being that the network should devote more focus to the important parts of the data, even though they may be a small portion of an image or sentence. Which parts of the data matter more than others depends on the context, and this is learned by gradient descent.

Attention-like mechanisms were introduced in the 1990s under names like multiplicative modules, sigma pi units, and hyper-networks.[1] Their flexibility comes from their role as "soft weights" that can change during runtime, in contrast to standard weights that must remain fixed at runtime. Uses of attention include memory in fast weight controllers[2] that can learn "internal spotlights of attention"[3] (also known as transformers with "linearized self-attention"[4][5]), neural Turing machines, reasoning tasks in differentiable neural computers,[6] language processing in transformers and LSTMs, and multi-sensory data processing (sound, images, video, and text) in perceivers.[7][8][9][10]

Approach

Correlating the different parts within a sentence or a picture can help capture its structure. The attention scheme gives a neural network an opportunity to do that. For example, in the sentence "See that girl run.", when the network processes "that" we want it to know that this word refers to "girl". The notes below describe how a well-trained network can make this happen.

- X is the input matrix of word embeddings, size 4 x 300. x is the word vector for "that".

- The attention head consists of 3 neural networks to be trained. Each has 100 neurons with a weight matrix sized 300 x 100.

- (*) This calculation is softmax( qK^T / sqrt(100) ), without V. Rescaling by sqrt(100) prevents a high variance in qK^T that would allow a single word to dominate the softmax excessively, resulting in attention to only one word, as a discrete hard max would do.

In practice, instead of computing the context for each word individually as was done for "that", we would compute it for all words in the sentence in parallel. For the example here, this would add a second dimension of size 4 to each of these vectors: x, q, soft weights, and context. This is much faster than the sequential operations of the recurrent networks used before attention.
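
The computation described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the embeddings and the three weight matrices are random stand-ins for trained values, the helper names (`matmul`, `softmax`, `attention`, `rand_matrix`) are made up for this example, and the weight scale is chosen only so that query and key entries have roughly unit variance.

```python
import math
import random

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    cols_B = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols_B] for row in A]

def softmax(row):
    """Numerically stable softmax of one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(X, Wq, Wk, Wv):
    """Scaled dot-product attention for every row (word) of X at once."""
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    d = len(K[0])                           # width of the head, 100 here
    K_T = [list(col) for col in zip(*K)]    # transpose of K
    scores = matmul(Q, K_T)                 # 4 x 4 matrix of q . k dot products
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return weights, matmul(weights, V)      # soft weights (4 x 4), context (4 x 100)

rng = random.Random(0)
n_words, d_embed, d_head = 4, 300, 100      # "See that girl run", sizes from the text

def rand_matrix(rows, cols, scale):
    """Random stand-in for trained values (an assumption of this sketch)."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

X = rand_matrix(n_words, d_embed, 1.0)                # stand-in word embeddings
Wq = rand_matrix(d_embed, d_head, d_embed ** -0.5)    # untrained query weights
Wk = rand_matrix(d_embed, d_head, d_embed ** -0.5)    # untrained key weights
Wv = rand_matrix(d_embed, d_head, d_embed ** -0.5)    # untrained value weights

weights, context = attention(X, Wq, Wk, Wv)
that_weights, that_context = weights[1], context[1]   # the row for "that"
```

Each row of `weights` sums to 1 and gives the soft weights for one word; row 1 corresponds to "that" in this toy setup. With trained matrices, most of that row's mass would be expected to fall on "girl".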

Notation: the commonly written row-wise softmax formula here assumes that vectors are rows, which contradicts the standard math notation of column vectors. Strictly speaking, we should take the transpose of the context vector and use the column-wise softmax, resulting in the more correct form

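$$\text{Context} = (X V_w)^T \, \operatorname{softmax}\!\left( \frac{(X K_w)\,(x\,Q_w)^T}{\sqrt{100}} \right)$$

Here X K_w and X V_w are the 4 x 100 key and value matrices, x Q_w is the 1 x 100 query for the current word, and the softmax runs over the resulting 4-long column, so the context is a 100-long column vector.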
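
The row and column conventions can be checked against each other numerically. The sketch below assumes the keys and values are computed as X K_w and X V_w (one word per row of X), uses small illustrative sizes instead of 4, 300 and 100, and fills all matrices with random stand-in values; the helper names are hypothetical.

```python
import math
import random

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    cols_B = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols_B] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def softmax(v):
    m = max(v)
    exps = [math.exp(s - m) for s in v]
    total = sum(exps)
    return [e / total for e in exps]

rng = random.Random(1)
n, d_in, d = 4, 6, 3        # small sizes for readability (assumption)

def rand_matrix(rows, cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

X = rand_matrix(n, d_in)
Qw, Kw, Vw = rand_matrix(d_in, d), rand_matrix(d_in, d), rand_matrix(d_in, d)
x = [X[1]]                  # 1 x d_in row vector for one word
q = matmul(x, Qw)           # 1 x d query

# Row convention: the context is a 1 x d row vector.
scores_row = matmul(q, transpose(matmul(X, Kw)))[0]
w_row = softmax([s / math.sqrt(d) for s in scores_row])
context_row = matmul([w_row], matmul(X, Vw))[0]

# Column convention: the context is a d x 1 column vector.
scores_col = matmul(matmul(X, Kw), transpose(q))          # n x 1
w_col = softmax([r[0] / math.sqrt(d) for r in scores_col])
context_col = matmul(transpose(matmul(X, Vw)), [[w] for w in w_col])
```

The two results hold the same numbers; `context_col` is simply the transpose of `context_row`.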

A language translation example

To build a machine that translates English to French, an attention unit is grafted to the basic Encoder-Decoder (diagram below). In the simplest case, the attention unit consists of dot products of the recurrent encoder states and does not need training. In practice, the attention unit consists of 3 trained, fully-connected neural network layers called query, key, and value.

| Label | Description |
|---|---|
| 100 | Max. sentence length |
| 300 | Embedding size (word dimension) |
| 500 | Length of hidden vector |
| 9k, 10k | Dictionary size of input & output languages respectively. |
| x, Y | 9k and 10k 1-hot dictionary vectors. The mapping from the 1-hot vector x to its embedding x is implemented as a lookup table rather than a vector multiplication. Y is the 1-hot maximizer of the linear decoder layer D; that is, it takes the argmax of D's linear layer output. |
| x | 300-long word embedding vector. The vectors are usually pre-calculated from other projects such as GloVe or Word2Vec. |
| h | 500-long encoder hidden vector. At each point in time, this vector summarizes all the preceding words. The final h can be viewed as a "sentence" vector, or a thought vector as Hinton calls it. |
| s | 500-long decoder hidden state vector. |
| E | 500-neuron RNN encoder. 500 outputs. Input count is 800: 300 from source embedding + 500 from recurrent connections. The encoder feeds directly into the decoder only to initialize it, but not thereafter; hence, that direct connection is shown very faintly. |
| D | 2-layer decoder. The recurrent layer has 500 neurons and the fully-connected linear layer has 10k neurons (the size of the target vocabulary).[11] The linear layer alone has 5 million (500 × 10k) weights, ~10 times more weights than the recurrent layer. |
| score | 100-long alignment score |
| w | 100-long attention weight vector. These are "soft" weights which change during the forward pass, in contrast to "hard" neuronal weights that change during the learning phase. |
| A | Attention module: this can be a dot product of recurrent states, or the query-key-value fully-connected layers. The output is a 100-long vector w. |
| H | 500×100. 100 hidden vectors h concatenated into a matrix |
| c | 500-long context vector = H * w. c is a linear combination of h vectors weighted by w. |
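
The simplest, training-free case in the table (A as a dot product of recurrent states) can be sketched as follows. The hidden states are random stand-ins, and scoring each encoder state against a single decoder state `s` is an assumption made for illustration; the table does not fix which decoder state is used.

```python
import math
import random

rng = random.Random(0)
hidden, max_len = 500, 100      # sizes from the table

# The 100 encoder hidden vectors h (the columns of H) and one decoder state s.
H_cols = [[rng.gauss(0.0, 0.1) for _ in range(hidden)] for _ in range(max_len)]
s = [rng.gauss(0.0, 0.1) for _ in range(hidden)]

# score: one dot product h . s per source position (100-long).
score = [sum(hi * si for hi, si in zip(h, s)) for h in H_cols]

# w: softmax of the scores, the 100-long vector of "soft" attention weights.
m = max(score)
exps = [math.exp(v - m) for v in score]
total = sum(exps)
w = [e / total for e in exps]

# c = H * w: a linear combination of the h vectors weighted by w (500-long).
c = [sum(w[j] * H_cols[j][i] for j in range(max_len)) for i in range(hidden)]
```

No weights are trained here: `w` is recomputed from the current states on every decoder step, which is what makes it a "soft" weight.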

Viewed as a matrix, the attention weights show how the network adjusts its focus according to context.

| | I | love | you |
|---|---|---|---|
| je | 0.94 | 0.02 | 0.04 |
| t' | 0.11 | 0.01 | 0.88 |
| aime | 0.03 | 0.95 | 0.02 |

This view of the attention weights addresses the neural network "explainability" problem. Networks that perform verbatim translation without regard to word order would show the highest scores along the (dominant) diagonal of the matrix. The off-diagonal dominance shows that the attention mechanism is more nuanced. On the first pass through the decoder, 94% of the attention weight is on the first English word "I", so the network offers the word "je". On the second pass of the decoder, 88% of the attention weight is on the third English word "you", so it offers "t'". On the last pass, 95% of the attention weight is on the second English word "love", so it offers "aime".
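
The reading of the matrix described above amounts to taking, for each decoder pass, the source word with the largest attention weight. A small sketch using the weights from the table (the variable names are chosen for this example):

```python
english = ["I", "love", "you"]
french = ["je", "t'", "aime"]

# Rows: decoder passes (French outputs). Columns: English source words.
attention = [
    [0.94, 0.02, 0.04],
    [0.11, 0.01, 0.88],
    [0.03, 0.95, 0.02],
]

alignment = {}
for fr_word, row in zip(french, attention):
    best = max(range(len(row)), key=row.__getitem__)   # index of the largest weight
    alignment[fr_word] = english[best]

print(alignment)   # {'je': 'I', "t'": 'you', 'aime': 'love'}
```

The largest weights sit off the diagonal for the second and third rows, which reflects the change in word order between "I love you" and "je t'aime".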

Variants

Many variants of attention implement soft weights, such as

- "internal spotlights of attention"[3] generated by fast weight programmers or fast weight controllers (1992)[2] (also "linearized self-attention"[4][5]). A slow neural network learns by gradient descent to program the fast weights of another neural network through outer products of self-generated activation patterns called "FROM" and "TO" which in transformer terminology are called "key" and "value." This fast weight "attention mapping" is applied to queries.

- Bahdanau Attention,[12] also referred to as additive attention,

- Luong Attention,[13] which is known as multiplicative attention, built on top of additive attention,

- highly parallelizable self-attention introduced in 2016 as decomposable attention[14] and successfully used in transformers a year later.
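
The fast weight idea in the first item of the list can be sketched as follows. This is a simplified illustration: it assumes the fast weight matrix is a plain sum of value-key outer products and leaves out the feature maps and normalization that linearized self-attention typically adds. All vectors are random stand-ins.

```python
import random

rng = random.Random(0)
d_key, d_val, steps = 4, 3, 5   # illustrative sizes (assumption)

keys = [[rng.uniform(-1.0, 1.0) for _ in range(d_key)] for _ in range(steps)]
values = [[rng.uniform(-1.0, 1.0) for _ in range(d_val)] for _ in range(steps)]
query = [rng.uniform(-1.0, 1.0) for _ in range(d_key)]

# Fast weight matrix: sum of outer products value_i (x) key_i, shape d_val x d_key.
W_fast = [[0.0] * d_key for _ in range(d_val)]
for k, v in zip(keys, values):
    for r in range(d_val):
        for c in range(d_key):
            W_fast[r][c] += v[r] * k[c]

# Applying the fast weights to a query.
out_fast = [sum(W_fast[r][c] * query[c] for c in range(d_key)) for r in range(d_val)]

# The same result written as attention without softmax:
# each value is weighted by the dot product of its key with the query.
dots = [sum(kc * qc for kc, qc in zip(k, query)) for k in keys]
out_attn = [sum(dot * v[r] for dot, v in zip(dots, values)) for r in range(d_val)]
```

Both outputs agree up to rounding, which is the sense in which a fast weight memory behaves like unnormalized linear attention.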

For convolutional neural networks, attention mechanisms can be distinguished by the dimension on which they operate, namely: spatial attention,[15] channel attention,[16] or combinations.[17][18]

These variants recombine the encoder-side inputs to redistribute those effects to each target output. Often, a correlation-style matrix of dot products provides the re-weighting coefficients.
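
The additive and multiplicative variants differ mainly in how the score between an encoder state h and a decoder state s is computed. The sketch below uses the commonly cited forms (a tanh feed-forward score for additive attention, a bilinear score for multiplicative attention); the parameter names `W_h`, `W_s`, `v` and `W`, the sizes, and the random values are assumptions for illustration.

```python
import math
import random

rng = random.Random(0)
d_hidden, d_att, n_words = 4, 3, 5   # illustrative sizes (assumption)

def rand_vec(n):
    return [rng.uniform(-1.0, 1.0) for _ in range(n)]

def rand_mat(rows, cols):
    return [rand_vec(cols) for _ in range(rows)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(M, v):
    return [dot(row, v) for row in M]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def additive_score(h, s, W_h, W_s, v):
    """Additive (Bahdanau-style) score: v . tanh(W_h h + W_s s)."""
    hidden = [math.tanh(a + b) for a, b in zip(matvec(W_h, h), matvec(W_s, s))]
    return dot(v, hidden)

def multiplicative_score(h, s, W):
    """Multiplicative (Luong-style) score: the bilinear form s . (W h)."""
    return dot(s, matvec(W, h))

H = [rand_vec(d_hidden) for _ in range(n_words)]   # encoder states, one per word
s = rand_vec(d_hidden)                             # current decoder state
W_h, W_s, v = rand_mat(d_att, d_hidden), rand_mat(d_att, d_hidden), rand_vec(d_att)
W = rand_mat(d_hidden, d_hidden)

w_add = softmax([additive_score(h, s, W_h, W_s, v) for h in H])
w_mul = softmax([multiplicative_score(h, s, W) for h in H])
```

In both cases the softmax turns the scores into re-weighting coefficients over the encoder states. With `W` set to the identity matrix, the multiplicative score reduces to a plain dot product.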

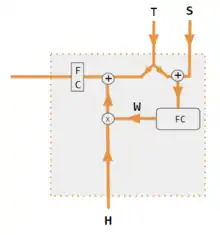

| 1. encoder-decoder dot product | 2. encoder-decoder QKV | 3. encoder-only dot product | 4. encoder-only QKV | 5. PyTorch tutorial |
|---|---|---|---|---|
| Both encoder & decoder are needed to calculate attention.[13] | Both encoder & decoder are needed to calculate attention.[19] | Decoder is not used to calculate attention. With only 1 input into corr, W is an auto-correlation of dot products: w_ij = x_i · x_j.[20] | Decoder is not used to calculate attention.[21] | A fully-connected layer is used to calculate attention instead of dot product correlation.[22] |

| Label | Description |
|---|---|
| Variables X, H, S, T | Upper case variables represent the entire sentence, and not just the current word. For example, H is a matrix of the encoder hidden state, one word per column. |
| S, T | S, decoder hidden state; T, target word embedding. In the PyTorch tutorial variant's training phase, T alternates between 2 sources depending on the level of teacher forcing used. T could be the embedding of the network's output word, i.e. embedding(argmax(FC output)). Alternatively, with teacher forcing, T could be the embedding of the known correct word, which can occur with a constant forcing probability, say 1/2. |
| X, H | H, encoder hidden state; X, input word embeddings. |
| W | Attention coefficients |
| Qw, Kw, Vw, FC | Weight matrices for query, key, and value respectively. FC is a fully-connected weight matrix. |
| ⊕, ⊗ | ⊕, vector concatenation; ⊗, matrix multiplication. |
| corr | Column-wise softmax(matrix of all combinations of dot products). The dot products are x_i · x_j in variant 3, h_i · s_j in variant 1, column_i(Kw H) · column_j(Qw S) in variant 2, and column_i(Kw X) · column_j(Qw X) in variant 4. Variant 5 uses a fully-connected layer to determine the coefficients. If the variant is QKV, then the dot products are normalized by sqrt(d), where d is the height of the QKV matrices. |
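
The corr operation for the two encoder-only variants (3 and 4) can be sketched as below. Following the legend, words are stored one per column; the helper names, the sizes, and the random values standing in for embeddings and trained matrices are assumptions of this example.

```python
import math
import random

rng = random.Random(0)
d_embed, d, n_words = 6, 3, 4   # illustrative sizes; d is the height of Kw and Qw

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def matvec(M, v):
    return [dot(row, v) for row in M]

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def corr(A_cols, B_cols, scale=1.0):
    """Column-wise softmax over all dot products a_i . b_j.

    Returns a list of columns, so that columns[j][i] holds w_ij.
    """
    return [softmax([dot(a, b) / scale for a in A_cols]) for b in B_cols]

# X as a list of columns, one word embedding per column.
X_cols = [[rng.uniform(-1.0, 1.0) for _ in range(d_embed)] for _ in range(n_words)]

# Variant 3: encoder-only dot product, w_ij from x_i . x_j.
W3 = corr(X_cols, X_cols)

# Variant 4: encoder-only QKV, dot products of column_i(Kw X) and column_j(Qw X),
# normalized by sqrt(d).
Kw = [[rng.uniform(-1.0, 1.0) for _ in range(d_embed)] for _ in range(d)]
Qw = [[rng.uniform(-1.0, 1.0) for _ in range(d_embed)] for _ in range(d)]
K_cols = [matvec(Kw, x) for x in X_cols]
Q_cols = [matvec(Qw, x) for x in X_cols]
W4 = corr(K_cols, Q_cols, scale=math.sqrt(d))
```

Each column of `W3` and `W4` sums to 1. Variants 1 and 2 would call the same function with the decoder states S as the second argument instead of X.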

Self-attention

Self-attention has gained currency given its use in transformer-based large language models. It captures dependencies between the different parts of a (single) sequence. The term "self" signals that attention is contained within a single sequence, without engaging other sequences. The model can capture dependencies across the entire sequence, without requiring fixed or sliding windows that consider only part of the sequence at a time. Because all positions can be processed in parallel, self-attention is computationally efficient compared to recurrent neural networks, which process sequences one step at a time.[23]

See also

- Transformer (machine learning model) § Scaled dot-product attention

- Perceiver § Components for query-key-value (QKV) attention

References

- Yann Lecun (2020). Deep Learning course at NYU, Spring 2020, video lecture Week 6. Event occurs at 53:00. Retrieved 2022-03-08.

- Schmidhuber, Jürgen (1992). "Learning to control fast-weight memories: an alternative to recurrent nets". Neural Computation. 4 (1): 131–139.

- Schmidhuber, Jürgen (1993). "Reducing the ratio between learning complexity and number of time-varying variables in fully recurrent nets". ICANN 1993. Springer. pp. 460–463.

- Schlag, Imanol; Irie, Kazuki; Schmidhuber, Jürgen (2021). "Linear Transformers Are Secretly Fast Weight Programmers". ICML 2021. PMLR. pp. 9355–9366.

- Choromanski, Krzysztof; Likhosherstov, Valerii; Dohan, David; Song, Xingyou; Gane, Andreea; Sarlos, Tamas; Hawkins, Peter; Davis, Jared; Mohiuddin, Afroz; Kaiser, Lukasz; Belanger, David; Colwell, Lucy; Weller, Adrian (2020). "Rethinking Attention with Performers". arXiv:2009.14794 [cs.CL].

- Graves, Alex; Wayne, Greg; Reynolds, Malcolm; Harley, Tim; Danihelka, Ivo; Grabska-Barwińska, Agnieszka; Colmenarejo, Sergio Gómez; Grefenstette, Edward; Ramalho, Tiago; Agapiou, John; Badia, Adrià Puigdomènech; Hermann, Karl Moritz; Zwols, Yori; Ostrovski, Georg; Cain, Adam; King, Helen; Summerfield, Christopher; Blunsom, Phil; Kavukcuoglu, Koray; Hassabis, Demis (2016-10-12). "Hybrid computing using a neural network with dynamic external memory". Nature. 538 (7626): 471–476. Bibcode:2016Natur.538..471G. doi:10.1038/nature20101. ISSN 1476-4687. PMID 27732574. S2CID 205251479.

- Vaswani, Ashish; Shazeer, Noam; Parmar, Niki; Uszkoreit, Jakob; Jones, Llion; Gomez, Aidan N.; Kaiser, Lukasz; Polosukhin, Illia (2017-12-05). "Attention Is All You Need". arXiv:1706.03762 [cs.CL].

- Ramachandran, Prajit; Parmar, Niki; Vaswani, Ashish; Bello, Irwan; Levskaya, Anselm; Shlens, Jonathon (2019-06-13). "Stand-Alone Self-Attention in Vision Models". arXiv:1906.05909 [cs.CV].

- Jaegle, Andrew; Gimeno, Felix; Brock, Andrew; Zisserman, Andrew; Vinyals, Oriol; Carreira, Joao (2021-06-22). "Perceiver: General Perception with Iterative Attention". arXiv:2103.03206 [cs.CV].

- Ray, Tiernan. "Google's Supermodel: DeepMind Perceiver is a step on the road to an AI machine that could process anything and everything". ZDNet. Retrieved 2021-08-19.

- "Pytorch.org seq2seq tutorial". Retrieved December 2, 2021.

- Bahdanau, Dzmitry (2016-05-19). "Neural Machine Translation by Jointly Learning to Align and Translate". arXiv:1409.0473 [cs.CL].

- Luong, Minh-Thang (2015-09-20). "Effective Approaches to Attention-based Neural Machine Translation". arXiv:1508.04025v5 [cs.CL].

- "Papers with Code - A Decomposable Attention Model for Natural Language Inference". paperswithcode.com.

- Zhu, Xizhou; Cheng, Dazhi; Zhang, Zheng; Lin, Stephen; Dai, Jifeng (2019). "An Empirical Study of Spatial Attention Mechanisms in Deep Networks". 2019 IEEE/CVF International Conference on Computer Vision (ICCV): 6687–6696. arXiv:1904.05873. doi:10.1109/ICCV.2019.00679. ISBN 978-1-7281-4803-8. S2CID 118673006.

- Hu, Jie; Shen, Li; Sun, Gang (2018). "Squeeze-and-Excitation Networks". IEEE/CVF Conference on Computer Vision and Pattern Recognition: 7132–7141. arXiv:1709.01507. doi:10.1109/CVPR.2018.00745. ISBN 978-1-5386-6420-9. S2CID 206597034.

- Woo, Sanghyun; Park, Jongchan; Lee, Joon-Young; Kweon, In So (2018-07-18). "CBAM: Convolutional Block Attention Module". arXiv:1807.06521 [cs.CV].

- Georgescu, Mariana-Iuliana; Ionescu, Radu Tudor; Miron, Andreea-Iuliana; Savencu, Olivian; Ristea, Nicolae-Catalin; Verga, Nicolae; Khan, Fahad Shahbaz (2022-10-12). "Multimodal Multi-Head Convolutional Attention with Various Kernel Sizes for Medical Image Super-Resolution". arXiv:2204.04218 [eess.IV].

- Neil Rhodes (2021). CS 152 NN—27: Attention: Keys, Queries, & Values. Event occurs at 06:30. Retrieved 2021-12-22.

- Alfredo Canziani & Yann Lecun (2021). NYU Deep Learning course, Spring 2020. Event occurs at 05:30. Retrieved 2021-12-22.

- Alfredo Canziani & Yann Lecun (2021). NYU Deep Learning course, Spring 2020. Event occurs at 20:15. Retrieved 2021-12-22.

- Robertson, Sean. "NLP From Scratch: Translation With a Sequence To Sequence Network and Attention". pytorch.org. Retrieved 2021-12-22.

- "Self -attention in NLP". GeeksforGeeks. 2020-09-04. Retrieved 2023-04-30.

External links

- Dan Jurafsky and James H. Martin (2022) Speech and Language Processing (3rd ed. draft, January 2022), ch. 10.4 Attention and ch. 9.7 Self-Attention Networks: Transformers

- Alex Graves (4 May 2020), Attention and Memory in Deep Learning (video lecture), DeepMind / UCL, via YouTube

- Rasa Algorithm Whiteboard - Attention via YouTube